A report by Expert Forum on online manipulation in the Republic of Moldova reveals an alarming reality. From artificially generated fake accounts to coordinated networks creating the illusion of organic posting behavior, the report presents a complete anatomy of an information war ahead of the parliamentary elections on September 28.

Behind this manipulation lies a systematic campaign designed to create constant anti-EU anxiety about external threats, war, and loss of sovereignty. The analysis reveals that fear-based messages dominate the narrative landscape, with coordinated accounts spreading alarmist stories about forced integration and foreign control, according to the authors.

Titled "Analysis of Coordinated Inauthentic Behavior in Moldova: 23 Days Before Elections", the report is based on the analysis of 3,392 videos, 36,338 comments, and 100 identified fake accounts.

Undecided Voters Targeted

Romania's case has shown the domino effect of digital manipulation during electoral periods. We observe a dramatic worldwide increase in "late-deciding voters" – voters who make their decision at the last moment, influenced by what they see on screens in the final weeks. The battle for people's attention and the struggle to occupy as much screen time as possible are no longer just a political marketing strategy; we are witnessing a direct race to control the attention of voters who spend a lot of time online, the report notes.

In this tense geopolitical context, the content circulating a month before elections becomes an extremely effective tool for persuasion and manipulation of public perception.

Romania's experience, in which a presumed far-right independent candidate rose rapidly through inauthentic accounts, that is, through a digitally orchestrated campaign, demonstrates that the power of these influence tools cannot be underestimated, even though we must not minimize the importance of the socio-economic factors that create fertile ground for these messages.

The report analyzed the last 30 days of trending content in the Republic of Moldova to understand what real-time information warfare looks like.

Trending in the context of this analysis means content in the top 100 on monitored hashtags, generally with over 5,000 views. CIB (Coordinated Inauthentic Behavior) refers to inauthentic content, where coordination can be observed in the monitored themes, recycled videos, and distribution patterns, without necessarily being identical in technical details.

Evolution of Threat: From Troll Farms to AI

What we observe today is fundamentally different from classic disinformation campaigns, the report authors state. These traditional campaigns are becoming increasingly rare and are being replaced by something much easier: AI. Technologies like ChatGPT have dramatically accelerated the pollution of feeds with artificial content that mimics human behavior.

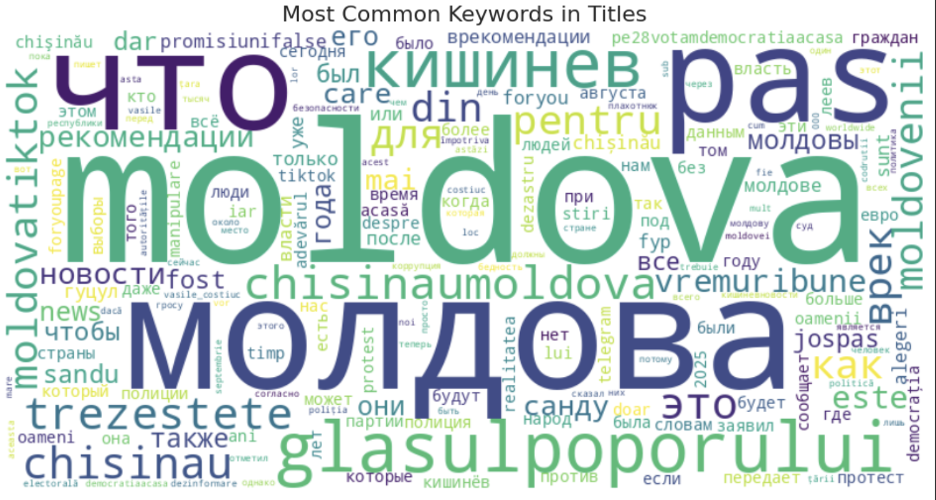

The analysis revealed a massive focus on regional political terms directly reflecting geopolitical pressures.

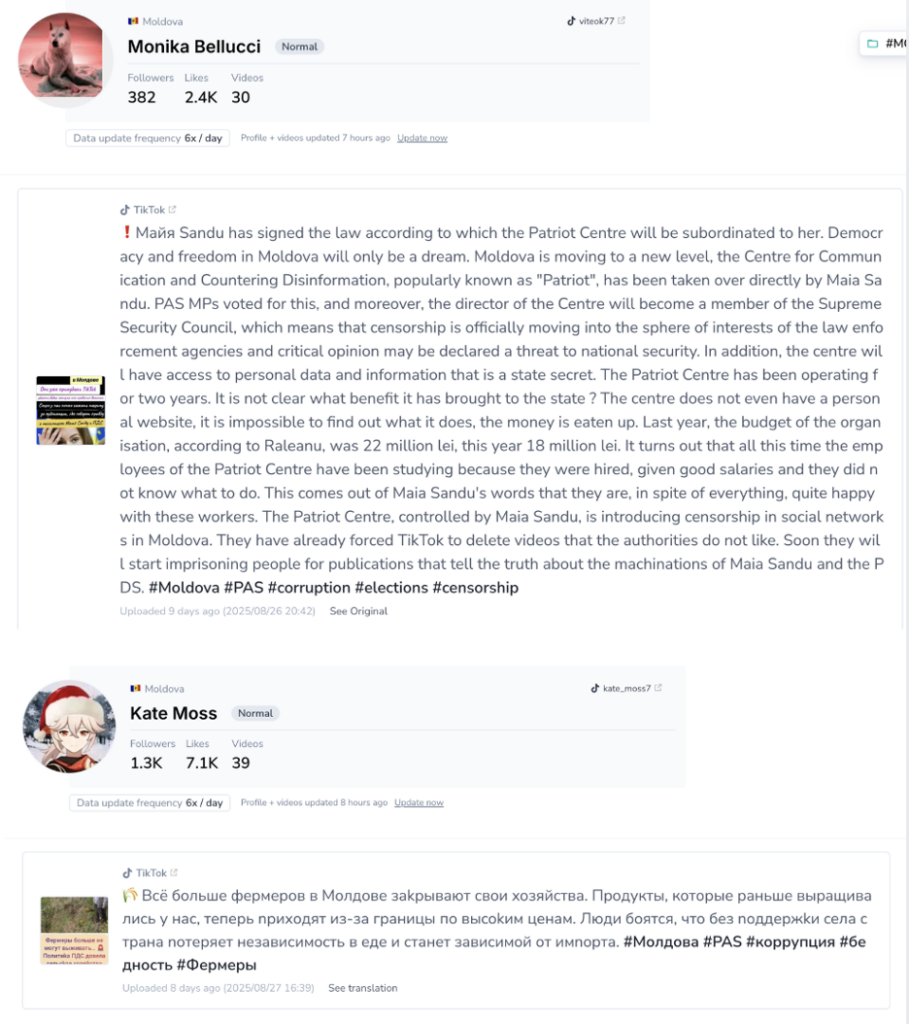

A relevant trend is the use of small accounts, with a few hundred followers, each having 1-2 videos with a staggering number of views – 30,000, 100,000 – completely disproportionate to the account size and other interactions.
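The pattern described above, a small account with one or two videos whose views far exceed its follower count, can be illustrated with a simple heuristic. The following is only a minimal sketch under stated assumptions: the `Account` fields, the function name, and all thresholds (`max_followers`, `max_videos`, `min_ratio`) are hypothetical and are not taken from the report, which does not describe its detection method in this summary.

```python
from dataclasses import dataclass


@dataclass
class Account:
    # Hypothetical field names chosen for illustration; not from the report.
    handle: str
    followers: int
    video_views: list[int]


def is_disproportionate(account: Account, max_followers: int = 1_000,
                        max_videos: int = 2, min_ratio: float = 30.0) -> bool:
    """Flag small accounts with few videos whose best video far outstrips their follower count.

    All thresholds are illustrative assumptions, not values from the report.
    """
    if not account.video_views or account.followers <= 0:
        return False
    small = (account.followers < max_followers
             and len(account.video_views) <= max_videos)
    ratio = max(account.video_views) / account.followers
    return small and ratio >= min_ratio


# Made-up sample accounts, not data from the report.
accounts = [
    Account("example_a", followers=300, video_views=[100_000]),
    Account("example_b", followers=50_000, video_views=[120_000, 90_000, 80_000]),
]
flagged = [a.handle for a in accounts if is_disproportionate(a)]
print(flagged)  # ['example_a']
```

A flag of this kind would only be a starting point for manual review, since genuine small accounts can occasionally go viral.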

These artificial accounts are much easier to create than traditional troll farms, requiring minimal investment for outsized results. AI can generate complete profiles, realistic photos, credible biographies, and varied content in minutes, work that would previously have required weeks of manual effort.

The danger for social media is that these accounts are becoming increasingly difficult to detect, mimicking human behavior and evolving constantly to evade automated detection systems.

In the electoral context of Moldova, this technology transforms misinformation from a tactic into a weapon with the potential to destabilize the flow of information, especially as we approach the day of the vote.

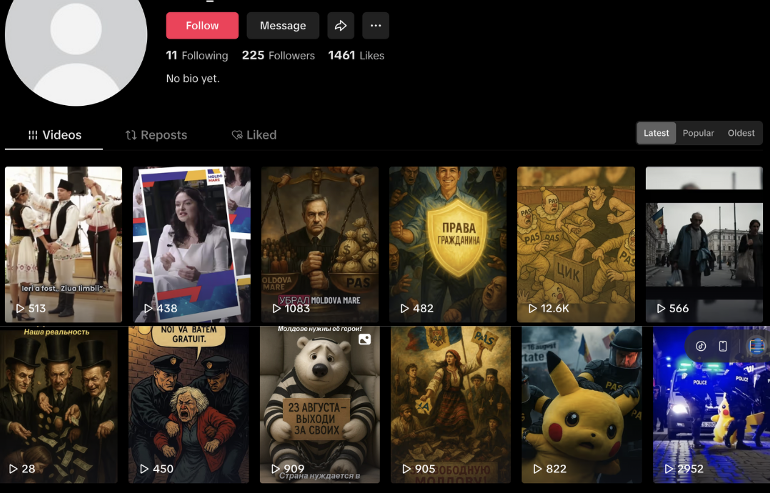

"Lover Boy" Tactic, "Lover Woman" Variation

One technique identified in Moldova's digital space is a classic psychological strategy: fake profiles or avatars that exploit visual appeal to increase interest in political messages.

These accounts heavily use generic (stock) photos or stolen/recycled images from real people, accounts purchased from authentic users, or other tactics to hijack digital identities.

The result is an army of accounts with photos of stereotypically beautiful and attractive women (according to conventional standards) engaging in CIB by spreading coordinated political propaganda.

This tactic is not random; it exploits fundamental cognitive biases. In the electoral context of Moldova, this manipulation becomes an information warfare weapon: propagandists know that an anti-EU or pro-Russian message will be more favorably received if it comes from a "beautiful young Moldovan woman" than from an anonymous or male account.

Digital Division of Labor

The analysis identified an infrastructure with various types of accounts, each with specific roles in the manipulation ecosystem.

"Fan accounts" serve exclusively as creators of political propaganda, while other accounts are responsible for leaving copy-paste or similar comments.

In practice, there is a digital division of labor: some accounts serve exclusively as "posters" (content creators), others focus only on comments, views, and artificial engagement, while others operate solely for following and amplification.

Many of these accounts overlap and serve multiple functions simultaneously.

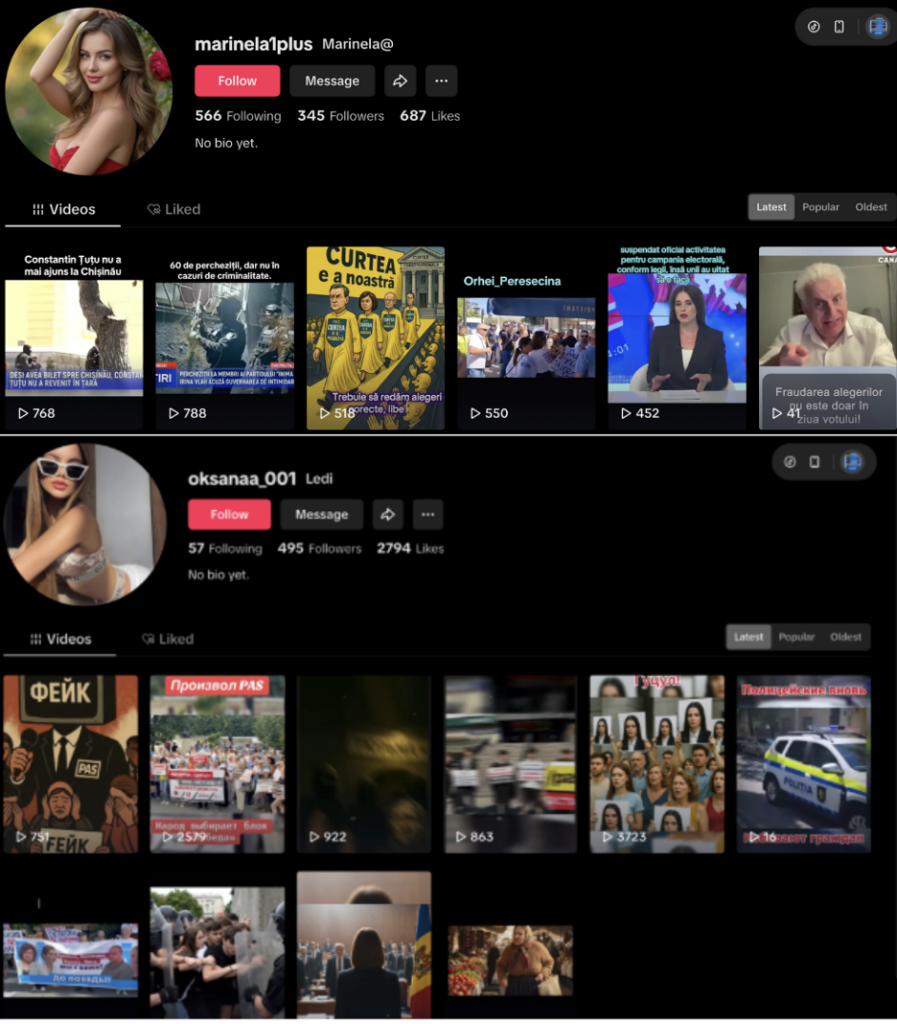

"Aunt with Flowers" Technique

Another relevant technique, called "aunt with flowers," includes accounts that create persona-type avatars, just like in commercial marketing where you target your audience by building representative fictional personas.

The goal of making these personas as diverse as possible is evident: to make them easy to empathize with. Although their behavior is exclusively political propaganda, profile pictures with flowers, the kind every Eastern European aunt or mother has had at some point, add a touch of authenticity.

Accounts referencing celebrity names have also been identified. It may seem comical that someone is named "Monika Bellucci," but this technique can create a subconscious familiarity with the terms.

From Anti-Sandu to Fear Mongering

The visual representation of words from duplicate comments shows a focus on the terms "pas", "down", "maia", "sandu", and "жos" (down). These terms appear frequently in the set of 10,196 identified duplicate comments out of the total 36,338 analyzed comments.
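By the figures above, duplicates make up roughly 28% of the analyzed comments (10,196 of 36,338). This summary does not say how the report identified duplicates, so the following is only a minimal sketch of one plausible approach: normalizing each comment's text and counting comments whose normalized form appears more than once. The function names and the sample comments are hypothetical.

```python
from collections import Counter
import unicodedata


def normalize(comment: str) -> str:
    """Normalize Unicode, ignore case, and collapse whitespace so trivial variants match."""
    text = unicodedata.normalize("NFKC", comment).casefold()
    return " ".join(text.split())


def duplicate_share(comments: list[str]) -> tuple[int, float]:
    """Return (count of comments whose normalized text occurs more than once, share of total)."""
    counts = Counter(normalize(c) for c in comments)
    duplicates = sum(n for n in counts.values() if n > 1)
    share = duplicates / len(comments) if comments else 0.0
    return duplicates, share


# Made-up sample comments, not data from the report.
sample = ["Jos PAS!", "jos  pas!", "Great video", "JOS PAS!", "Nice"]
dup_count, dup_share = duplicate_share(sample)
print(dup_count, round(dup_share, 2))  # 3 0.6
```

Exact matching after normalization catches only copy-paste comments; the "similar" comments mentioned in the report would need a fuzzier comparison, which this sketch does not attempt.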

The analysis of content categories reveals a three-tier strategy:

- Topic: National politicians focus on demonizing Maia Sandu and promoting politicians like Victoria Furtună and Plahotniuc.

- Topic: Local politicians exploit ethnic tensions with Gagauzia (especially regarding Evghenia Guțul's conviction).

- Topic: News promotes alarmist news about external threats, electoral interference, the danger posed by the EU, and the forced confrontation of NATO with Russia.

- Topic: Political parties mainly contain anti-PAS content.

This distribution combines personal attacks with the exploitation of regional tensions and the creation of generalized panic.

What Can Be Done

Similar to monitoring in Romania, urgent attention must be focused on accounts with coordinated inauthentic behavior.

The paradox we find ourselves in is that platforms invoke the right to freedom of expression to protect bots attacking the democratic system at a crucial moment for a country's future. This paradigm must change radically.

Official and real accounts of political actors must be protected, but urgent intervention should target the infrastructure of artificial amplification, these networks of inauthentic accounts and "soldiers" of virality that manipulate algorithms. Only by exposing and neutralizing these systems can we maintain platforms as authentic reflections of society (if we are not far from this ideal world), not as tools of hybrid warfare against democracy, concludes the report.

T.D.